Artificial intelligence is revolutionizing industries, with machine learning playing a central role. But as machine learning systems integrate further into critical applications, their vulnerabilities are becoming more evident. Adversarial attacks, which use carefully crafted inputs to mislead these systems, pose a growing threat.

Quantum machine learning represents a promising step forward: it aims to harness quantum computing to solve highly complex problems. Despite this potential, quantum machine learning is not immune to the adversarial challenges that affect classical systems. Fraunhofer AISEC has examined this issue in depth in a study comparing the adversarial robustness of quantum and classical machine learning models.

This research shows how adversarial attacks affect these two types of systems differently. It introduces methods to assess and improve the security of quantum models and shows how techniques such as regularization make models more robust. These findings are relevant for IT professionals, engineers, and decision-makers who aim to secure their AI systems against emerging threats.

Understanding the Key Concepts – Adversarial Attacks and Quantum Machine Learning

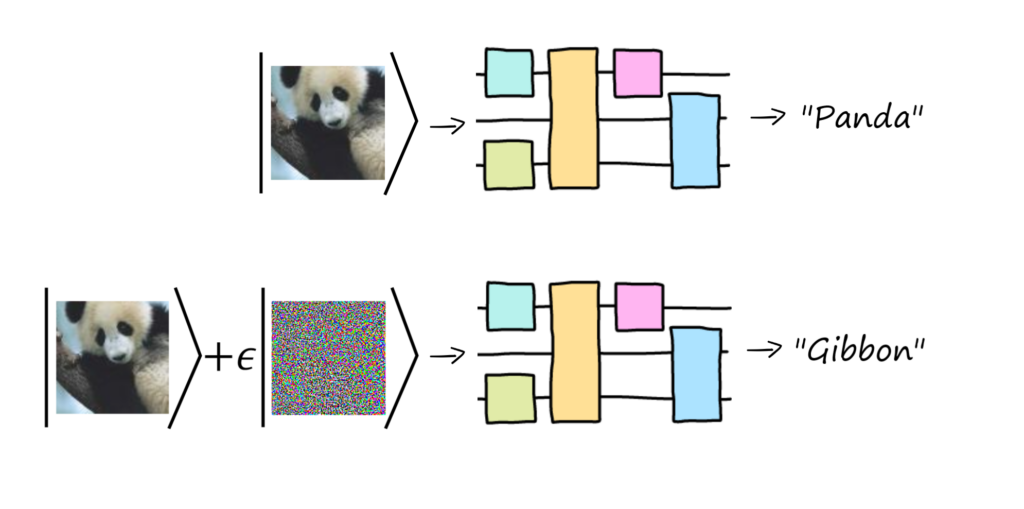

Adversarial attacks exploit weaknesses in machine learning models by making subtle, targeted modifications to input data. Although these changes are often imperceptible to humans, they can cause models to misclassify the data. For example, an attacker might alter a few pixels in an image to trick an ML model into classifying a stop sign as a yield sign. Figure 1 shows an adversarial attack on the image of a panda: after a small amount of noise is added to each pixel, the ML model no longer classifies the image correctly as a »panda« but incorrectly as a »gibbon«. (The noise is magnified in the figure to make it visible.) Such vulnerabilities are not merely theoretical; they have significant implications for industries such as healthcare, finance, and autonomous systems.

Figure 1: Manipulation of a quantum ML model by an adversarial attack leads to misclassification. By adding a small amount of noise to each pixel, the model incorrectly classifies the image of a panda as a »gibbon«.
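
The effect in Figure 1 is typically produced with gradient-based attacks. The following minimal sketch illustrates the idea on a hypothetical linear classifier rather than a real image model or a quantum model: a single FGSM-style step (fast gradient sign method) changes every pixel by only 0.02, yet it flips the predicted class. The weights, pixel values and epsilon are made-up assumptions chosen for illustration.

```python
def linear_score(weights, bias, x):
    """Score w·x + b of a hypothetical linear classifier; positive means 'panda'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, x, epsilon):
    """One FGSM-style step that lowers the 'panda' score.

    For a linear score the gradient with respect to x is simply `weights`,
    so every pixel moves by epsilon against sign(w). Results are clipped
    to the valid pixel range [0, 1].
    """
    return [min(1.0, max(0.0, xi - epsilon * sign(w))) for w, xi in zip(weights, x)]


# Hypothetical 100-pixel "image" and weights, chosen so that the clean
# input is classified as 'panda' with a small positive margin.
n_pixels = 100
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n_pixels)]
clean = [0.51 if i % 2 == 0 else 0.49 for i in range(n_pixels)]
adversarial = fgsm_perturb(weights, clean, epsilon=0.02)

print("clean score:", linear_score(weights, 0.0, clean))              # > 0: 'panda'
print("adversarial score:", linear_score(weights, 0.0, adversarial))  # < 0: 'gibbon'
```

The perturbation is tiny per pixel, but because it is aligned with the model's gradient in every pixel, the contributions add up across the whole input.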

Quantum machine learning is a rapidly growing field that combines insights from classical ML with quantum mechanical effects, such as superposition and entanglement of quantum states, to process data. Whereas classical systems process data with conventional computing methods, quantum models encode data into quantum states and operate on them in latent quantum Hilbert spaces, whose dimension grows exponentially with the number of qubits. This gives them the potential to outperform classical systems in certain tasks. However, quantum systems face similar security risks, so it is crucial to understand and address the vulnerabilities known from classical AI research.

Methodology: How the Study Was Conducted

Fraunhofer AISEC’s research evaluated several machine learning models, both quantum and classical, to understand their susceptibility to adversarial attacks. The study »A Comparative Analysis of Adversarial Robustness for Quantum and Classical Machine Learning Models« focused on quantum models that used different data encoding techniques, such as re-upload encoding and amplitude encoding, as well as on classical models, including a convolutional neural network (ConvNet) and a Fourier network. The Fourier network is particularly interesting because it mathematically mimics certain aspects of quantum models and thus acts as a bridge between quantum and classical approaches.
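
To make these ingredients more concrete, the sketch below shows amplitude encoding as a plain state-vector computation, together with a classical truncated Fourier series of a scalar input. The Fourier form rests on the known result that a model which re-uploads a scalar input through L Pauli-rotation layers can only express frequencies up to L. We assume a Fourier network of this simple one-dimensional shape; the architecture used in the study may differ. Function names and coefficients are hypothetical.

```python
import math


def amplitude_encode(features):
    """Return (n_qubits, amplitudes) for a real-valued feature vector.

    The vector is zero-padded to length 2**n_qubits and scaled to unit
    L2 norm, because the squared amplitudes of a quantum state sum to 1.
    """
    if not any(features):
        raise ValueError("need at least one non-zero feature")
    n_qubits = max(1, (len(features) - 1).bit_length())
    padded = list(features) + [0.0] * (2 ** n_qubits - len(features))
    norm = math.sqrt(sum(f * f for f in padded))
    return n_qubits, [f / norm for f in padded]


def fourier_model(x, c0, a, b):
    """Truncated Fourier series c0 + sum_k (a_k cos(k x) + b_k sin(k x)), k = 1..K."""
    return c0 + sum(
        a_k * math.cos(k * x) + b_k * math.sin(k * x)
        for k, (a_k, b_k) in enumerate(zip(a, b), start=1)
    )


n_qubits, state = amplitude_encode([3.0, 4.0, 0.0])
print(n_qubits, state)  # 2 [0.6, 0.8, 0.0, 0.0]
print(fourier_model(0.5, c0=0.1, a=[0.5, 0.2], b=[0.3, -0.1]))
```

Amplitude encoding packs 2^n features into n qubits, which is compact but makes every amplitude depend on the whole input through the normalization.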

To compare these models, we designed a dedicated dataset and applied Projected Gradient Descent (PGD), a standard iterative attack technique, to generate adversarial examples. We then used these examples to evaluate each model’s robustness. The study also examined how adversarial vulnerabilities transfer between quantum and classical models, shedding light on their similarities and differences. Additionally, we applied regularization techniques to the quantum models to increase their resilience to attacks and tested how effective these techniques are.
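
A minimal sketch of PGD with an L∞ budget, assuming a toy logistic-regression model in place of the study's quantum and classical models: each iteration takes a signed gradient ascent step on the loss and then projects the input back into the ε-ball around the original input and into the valid range [0, 1]. The parameters, ε, step size and number of steps are illustrative assumptions, not the values used in the study, and this variant starts from the clean input rather than a random point in the ball.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def loss_and_input_grad(w, b, x, y):
    """Binary cross-entropy of a logistic model and its gradient w.r.t. x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    tiny = 1e-12  # guards against log(0)
    loss = -(y * math.log(p + tiny) + (1 - y) * math.log(1 - p + tiny))
    grad = [(p - y) * wi for wi in w]  # d loss / d x_i = (p - y) * w_i
    return loss, grad


def pgd_attack(w, b, x, y, epsilon=0.1, step=0.02, n_steps=20):
    """L-infinity PGD: signed gradient ascent on the loss, projected after
    every step onto the epsilon-ball around x and the input range [0, 1]."""
    x_adv = list(x)
    for _ in range(n_steps):
        _, grad = loss_and_input_grad(w, b, x_adv, y)
        x_adv = [xa + step * ((g > 0) - (g < 0)) for xa, g in zip(x_adv, grad)]
        x_adv = [min(xi + epsilon, max(xi - epsilon, xa)) for xi, xa in zip(x, x_adv)]
        x_adv = [min(1.0, max(0.0, xa)) for xa in x_adv]
    return x_adv


# Hypothetical model parameters and one correctly classified input (label 1).
w, b = [1.5, -2.0, 0.5, 1.0], -0.2
x, y = [0.6, 0.3, 0.5, 0.7], 1
x_adv = pgd_attack(w, b, x, y)
print("clean loss:", loss_and_input_grad(w, b, x, y)[0])
print("adversarial loss:", loss_and_input_grad(w, b, x_adv, y)[0])
```

The projection step is what distinguishes PGD from plain gradient ascent: it keeps every adversarial example within the chosen perturbation budget.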

More specifically, we implemented Lipschitz regularization for quantum models, particularly those using re-upload encoding. The Lipschitz bound plays a central role in the study for understanding how models respond to adversarial perturbations. It quantifies how much a model’s output can change in response to small changes in its input: a model f with Lipschitz bound L satisfies |f(x) − f(x′)| ≤ L · ‖x − x′‖ for all inputs x and x′. A lower Lipschitz bound means that a model is less sensitive to such changes, which makes it inherently more robust against adversarial attacks.
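
The sketch below illustrates how such a bound can be computed for a re-upload model. We assume a hypothetical single-qubit model in which layer j encodes the input x as exp(−i w_j x H_j) with H_j = Y/2, followed by a trainable rotation, and which measures the observable M = Z. Because the derivative of the expectation value with respect to each encoding angle is bounded by 2‖H_j‖‖M‖, the model's Lipschitz bound is L = 2‖M‖ Σ_j |w_j| ‖H_j‖. Penalizing the encoding weights during training, as in `regularized_loss`, therefore lowers L. The parameter values and the quadratic penalty are illustrative assumptions, not the study's configuration.

```python
import math


def ry(phi):
    """RY(phi) = exp(-i * phi * Y / 2)."""
    c, s = math.cos(phi / 2), math.sin(phi / 2)
    return [[c, -s], [s, c]]


def rx(phi):
    """RX(phi) = exp(-i * phi * X / 2)."""
    c, s = math.cos(phi / 2), math.sin(phi / 2)
    return [[c, -1j * s], [-1j * s, c]]


def apply(gate, state):
    """Multiply a 2x2 gate with a single-qubit state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


def reupload_model(x, enc_weights, thetas):
    """Single-qubit re-upload model f(x) = <Z>.

    Layer j encodes the input as RY(w_j * x), i.e. H_j = Y/2, then applies
    a trainable RX(theta_j).
    """
    state = [1.0 + 0j, 0j]  # start in |0>
    for w, theta in zip(enc_weights, thetas):
        state = apply(rx(theta), apply(ry(w * x), state))
    return abs(state[0]) ** 2 - abs(state[1]) ** 2


def lipschitz_bound(enc_weights, h_norm=0.5, obs_norm=1.0):
    """L = 2 * ||M|| * sum_j |w_j| * ||H_j||; defaults: H_j = Y/2, M = Z."""
    return 2 * obs_norm * sum(abs(w) * h_norm for w in enc_weights)


def regularized_loss(task_loss, enc_weights, lam=0.01):
    """Task loss plus a penalty on the encoding weights, which shrinks L."""
    return task_loss + lam * sum(w * w for w in enc_weights)


# Hypothetical parameters of a 3-layer model.
enc_weights, thetas = [1.3, -0.7, 2.1], [0.4, 1.1, -0.8]
bound = lipschitz_bound(enc_weights)
xs = [i * 0.01 for i in range(-300, 301)]
ys = [reupload_model(x, enc_weights, thetas) for x in xs]
max_slope = max(abs(y1 - y0) / (x1 - x0)
                for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:]))
print(f"Lipschitz bound {bound:.2f}, largest observed slope {max_slope:.2f}")
```

The observed slope on the grid can never exceed the bound, since the bound caps the derivative of the model everywhere; shrinking the encoding weights tightens this cap.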

Key Findings

1. Adversarial Vulnerabilities Exist Across Models

Both quantum and classical models showed susceptibility to adversarial attacks. For instance:

- Classical ConvNets were vulnerable to attacks but showed localized perturbation patterns.

- Quantum models exhibited more scattered attack patterns, particularly those using amplitude encoding.
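
One hypothetical way to quantify the difference between localized and scattered perturbations is to measure which share of a perturbation's total magnitude falls on its largest 10% of pixels. The metric, the threshold and the example perturbations below are illustrative assumptions, not the method used in the study.

```python
def top_share_of_mass(perturbation, top_share=0.1):
    """Fraction of the total |delta| carried by the largest `top_share` of pixels.

    Values near 1.0 indicate a localized pattern; values near `top_share`
    indicate a perturbation spread evenly over the whole input.
    """
    magnitudes = sorted((abs(d) for d in perturbation), reverse=True)
    total = sum(magnitudes)
    if total == 0:
        return 0.0
    k = max(1, round(len(magnitudes) * top_share))
    return sum(magnitudes[:k]) / total


# Hypothetical 100-pixel perturbations.
localized = [0.1] * 10 + [0.0] * 90  # concentrated in one small region
scattered = [0.01, -0.01] * 50       # small changes everywhere
print(top_share_of_mass(localized))  # 1.0
print(top_share_of_mass(scattered))
```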

2. Transfer Attacks Highlight Model Weaknesses

Transferability of attacks between quantum and classical models revealed important insights:

- Fourier networks acted as a bridge, transferring attacks effectively across quantum and classical boundaries.

- Quantum machine learning models struggled with internal consistency in adversarial patterns, depending on the embedding technique used.

- Generally, quantum models did not exhibit higher intrinsic robustness, as attacks from classical models also transferred to the quantum domain.

3. Regularization Boosts Robustness

Applying regularization to quantum models significantly improved their resistance to adversarial attacks. The regularized models produced less noisy attack patterns, indicating that they focus on meaningful features, and their theoretical vulnerability, as measured by Lipschitz bounds, was lower.

How to Make Your Quantum AI Model More Robust Against Adversarial Attacks

Building on the findings about adversarial vulnerabilities and robustness in machine learning models, it is essential to explore practical methods to defend against these attacks. Our tutorial »Adversarial attacks and robustness for quantum machine learning« is a valuable resource for implementing such strategies and provides actionable insights to enhance the security of both quantum and classical AI models.

The key to robustness lies in understanding the model’s behavior under adversarial stress and incorporating defenses during training. For quantum models, as for classical ones, adversarial robustness can be improved through strategies such as adversarial training and regularization. Adversarial training is easy to implement: the model is exposed to adversarial examples during its learning phase, so that it learns to handle them and is more resilient when it encounters similar attacks in real-world scenarios. More rigorous robustness claims are possible when the model’s Lipschitz bound, which depends on its encoding Hamiltonians, is restricted via Lipschitz regularization of the quantum model, as described earlier.
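
A minimal sketch of adversarial training, assuming a logistic-regression model on hypothetical two-dimensional data in place of a quantum circuit. At every step the current model is attacked (the inner maximization), and the parameters are then updated on the attacked input (the outer minimization). For a linear model, the single signed-gradient step used here already yields the worst case inside the ε-ball; for non-linear or quantum models, a multi-step attack such as PGD would normally replace `fgsm`. Data, learning rate and ε are illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, epsilon):
    """Worst-case L-infinity perturbation for a logistic model.

    The input gradient of the cross-entropy loss is (p - y) * w; for a
    linear model one signed step of size epsilon maximizes the loss
    within the epsilon-ball.
    """
    p = predict_proba(w, b, x)
    return [xi + epsilon * ((g > 0) - (g < 0))
            for xi, g in zip(x, ((p - y) * wi for wi in w))]


def adversarial_train(data, epsilon=0.1, lr=0.1, epochs=50, seed=0):
    """SGD in which every example is replaced by an attack on the current model."""
    rng = random.Random(seed)
    data = list(data)  # do not shuffle the caller's list
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = fgsm(w, b, x, y, epsilon)  # inner maximization
            p = predict_proba(w, b, x_adv)
            w = [wi - lr * (p - y) * xa for wi, xa in zip(w, x_adv)]  # outer minimization
            b -= lr * (p - y)
    return w, b


def robust_accuracy(w, b, data, epsilon):
    """Accuracy on inputs attacked with the same epsilon."""
    hits = sum((predict_proba(w, b, fgsm(w, b, x, y, epsilon)) >= 0.5) == (y == 1)
               for x, y in data)
    return hits / len(data)


# Hypothetical toy data: two clusters centered at (0.3, 0.3) and (-0.3, -0.3).
gen = random.Random(1)
data = [([c * 0.3 + gen.uniform(-0.1, 0.1), c * 0.3 + gen.uniform(-0.1, 0.1)], int(c > 0))
        for c in (1, -1) for _ in range(20)]
w, b = adversarial_train(data)
print("weights:", w, "bias:", b)
print("robust accuracy (epsilon = 0.1):", robust_accuracy(w, b, data, 0.1))
```

Training on attacked inputs pushes the decision boundary away from the data by at least the attack budget, which is what makes the model more resilient to similar attacks later.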

Our tutorial also emphasizes the role of transfer attack analysis as a diagnostic tool. By examining how adversarial examples generated for one model affect others, it is possible to identify weaknesses that are shared or unique across architectures. This is particularly useful for organizations that employ hybrid systems combining quantum and classical models, as it helps in identifying common defensive measures.
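
A transfer-attack analysis can be organized as a matrix: adversarial examples are crafted against each source model and then evaluated on every target model. The diagonal measures white-box vulnerability, while the off-diagonal entries show how well attacks transfer. The sketch uses hypothetical linear stand-ins named after the architectures in the study, a toy dataset with labels ±1 and a single FGSM-style attack step. A real analysis would plug in the trained quantum and classical models and a stronger attack such as PGD.

```python
def score(w, x):
    """Linear decision score w·x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def attack(w, x, y, epsilon):
    """FGSM-style step against a linear model; labels y are -1 or +1."""
    return [xi - epsilon * y * sign(wi) for wi, xi in zip(w, x)]


def accuracy(w, data):
    """Fraction of examples whose predicted sign matches the label."""
    return sum(sign(score(w, x)) == y for x, y in data) / len(data)


def transfer_matrix(models, data, epsilon):
    """result[src][tgt]: accuracy of model `tgt` on examples crafted against `src`."""
    result = {}
    for src, w_src in models.items():
        adversarial = [(attack(w_src, x, y, epsilon), y) for x, y in data]
        result[src] = {tgt: accuracy(w_tgt, adversarial) for tgt, w_tgt in models.items()}
    return result


# Hypothetical linear stand-ins for three architectures and a tiny dataset
# on which all three are correct before the attack.
models = {
    "convnet": [1.0, 1.0, 0.0],
    "fourier": [1.0, 0.5, 0.5],
    "quantum": [0.0, 0.5, 1.0],
}
data = [([0.5, 0.2, 0.1], 1), ([-0.4, -0.3, -0.2], -1),
        ([0.2, 0.6, 0.3], 1), ([-0.1, -0.5, -0.4], -1)]
for src, row in transfer_matrix(models, data, epsilon=0.3).items():
    print(src, row)
```

A row with low accuracy across all targets points to a source model whose attacks transfer widely; a column with low accuracy points to a target that is vulnerable to attacks from many architectures.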

Finally, visualization tools and interpretability techniques, such as saliency maps, provide insights into how adversarial attacks alter input features and which regions of the input are considered most critical by a given (quantum) model. This information is invaluable for refining training strategies and ensuring that defenses are targeted effectively.
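
A saliency map scores each input feature by how strongly the model output reacts to it, commonly via the magnitude of the input gradient. The sketch below estimates that gradient with central finite differences, which treats the model as a black box and therefore also works for a simulated quantum model. This choice, the step size h and the toy model are assumptions; in practice, analytic or automatic differentiation would usually provide the gradient.

```python
import math


def saliency_map(model, x, h=1e-4):
    """Estimate |d model(x) / d x_i| for every input feature.

    Uses central finite differences, so `model` can be any black-box
    function from a list of floats to a float (e.g. a simulated quantum
    circuit's expectation value).
    """
    saliency = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        saliency.append(abs(model(up) - model(down)) / (2 * h))
    peak = max(saliency)
    # Normalize to [0, 1] for plotting; leave all-zero maps unchanged.
    return [s / peak for s in saliency] if peak > 0 else saliency


# Hypothetical model output that depends strongly on x[0], weakly on x[1]
# and not at all on x[2].
def toy_model(x):
    return math.tanh(2.0 * x[0] - 0.5 * x[1] + 0.0 * x[2])


print(saliency_map(toy_model, [0.1, 0.4, 0.7]))
```

Comparing such maps for a clean input and its adversarial counterpart shows which regions the attack exploits and whether a regularized model relies on more meaningful features.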

Conclusion: Building Resilient AI Systems

Our comparative analysis reveals that both quantum and classical machine learning models share vulnerabilities to adversarial attacks, but they also have unique characteristics that influence their robustness. The study highlights the importance of advanced techniques, such as transfer attack analysis and regularization, in enhancing the security of machine learning systems.

The practical defenses described in our tutorial complement the insights from Fraunhofer AISEC’s research, offering a comprehensive framework for improving AI security in both classical and quantum domains. By integrating these strategies, organizations can significantly enhance the robustness of their (quantum) machine learning systems.

Furthermore, our research raises important questions for future exploration. As quantum machine learning continues to evolve, it will be critical to refine these defensive techniques and expand their applicability to more complex and large-scale problems. Understanding the theoretical and practical differences between quantum and classical models will play a central role in developing innovative machine learning systems that incorporate security by design.

Fraunhofer AISEC remains at the forefront of this field, providing valuable insights and solutions to safeguard next-generation AI systems. Collaborating with institutions like Fraunhofer can help organizations address the challenges of securing their AI technologies against future adversarial threats. For those looking to enhance the security of their systems, now is the time to take action and engage with experts in this transformative field.

Authors

Maximilian Wendlinger

Maximilian Wendlinger is a research associate at Fraunhofer AISEC interested in the applications of cybersecurity in the era of Artificial Intelligence and Quantum Computing. His main focus is on the vulnerability of Machine Learning systems to adversarial attacks and on possible techniques to increase model robustness.

Kilian Tscharke

Kilian Tscharke has worked at Fraunhofer AISEC since 2022. As part of the Quantum Security Technologies (QST) group, he specializes in Anomaly Detection using Quantum Machine Learning. Part of his work is embedded in the Munich Quantum Valley, an initiative for developing and operating quantum computers in Bavaria. There, he focuses on Quantum Kernel methods, such as the Quantum Support Vector Machine, which use a quantum computer to calculate the kernel.

Contact: kilian.tscharke@aisec.fraunhofer.de