Why certify your cloud service in the first place?

The trend of companies using the cloud is not new – but still growing. Even small and medium-sized businesses are increasingly considering using the cloud, mainly for cost reasons[4].

The question arises why a company would want to have its cloud service certified anyway. We have identified the following three main reasons:

- Customer trust: A company can build trust with its customers by demonstrating that it adheres to common standards in the field, e.g., by featuring the respective certification labels on its website.

- Contractual duties: A common reason for certification today is that a contractor insists that its partner be compliant with a certain certification catalog.

- Regulatory intervention: National or supranational (e.g., EU) legislation may require companies in certain domains to comply with the respective standards. We will take a closer look at the European certification ecosystem in the following section.

Standardization in the EU

Security certification in the EU is shifting to a new approach. Currently, the situation is a patchwork: each nation has its own standards for certain domains. In Germany, for example, there is the Cloud Computing Compliance Criteria Catalogue (C5) addressing the cloud domain and the Medizinproduktegesetz (MPG)[5] addressing medical devices.

While the latter has already been officially replaced by the new European-wide MDR (Medical Devices Regulation)[6], the C5 is still the standard for cloud computing in Germany. This is likely to change. There is a new European candidate in the pipeline, the European Cybersecurity Certification Scheme for Cloud Services (EUCS).

There are two main drivers bringing the EUCS into play: The EU Cybersecurity Act[7] and the EU Cyber Resilience Act[8].

The Cybersecurity Act went into effect in 2019 and sets up a uniform framework for security certifications. Based on Article 48, the European Union Agency for Cybersecurity (ENISA)[9] proposes a candidate scheme, the EUCS. This is a security catalog for cloud services. In fact, one of its main references is the C5. As in the medical field, the goal is to replace national standards with the EUCS.

However, it is not mandatory to become certified – at least not yet. In the EU, the CE label on (physical) products indicates that these “meet EU safety, health, and environmental protection requirements.” Under the proposal for the EU Cyber Resilience Act (CRA), efforts are being made in the EU to apply this approach analogously to digital products as of 2025. The definition of such products is that they have at least some “digital elements.” Thus, they can be software-only or hardware products. These labels are intended to build trust, strengthen security in EU products, and provide more transparency to EU citizens. The objectives in the proposal, including showing compliance with a security framework and being transparent to customers, are already a good indicator that cybersecurity certifications are part of the bigger picture. In addition, the proposal aims to interact with other EU regulations, e.g., the EU Cybersecurity Act. And as we have just learned, this act proposes the EUCS in turn.

The certification process today (overview)

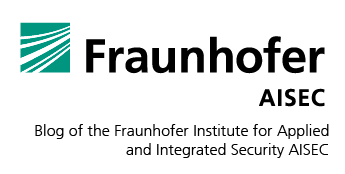

Let us look at the procedure of becoming certified. For demonstration purposes, we will use a running example. A global company, HyperIoT, operates an industrial IoT cloud service. To ensure the resilience and availability of its services in different countries, it uses various public cloud providers, including Microsoft Azure and Amazon Web Services (AWS). These providers offer a multitude of resources, such as virtual machines (VMs), storage, networks, and functions, and HyperIoT runs tens of thousands of these resources at each provider.

There are many logistics companies that are convinced of the service’s benefits for improving their productivity in asset tracking and fleet management. However, their policies require HyperIoT to be compliant with EUCS, e.g., to be sure they process data in a secure way. A certification process could take place as follows:

Since the certification includes information security aspects, the person in charge is HyperIoT’s chief information security officer (CISO).

To obtain the certification, an audit has to be conducted. In order to ensure a successful audit and manage the extensive documentation involved, it is advisable to seek third-party expertise. To assist in gathering the necessary information, evidence, and documents for the audit, as well as ensuring the accurate completion of paperwork, HyperIoT hires consultants.

Since the CISO cannot handle this alone, more employees are involved for support. The CISO, the consultants, and the other employees involved are in constant communication and meet on a regular basis to discuss further actions and what has to be improved.

When all necessary evidence has been gathered and the documents are ready, it is time to conduct the actual audit. For this purpose, an auditor visits the company and checks the collected information, e.g., policy documents and a sample of resource configurations.

Can the audit process be automated?

Typically, the audit preparation and the audit itself do not involve much automation. In fact, a common way to organize the audit preparation is to collect evidence in large Excel spreadsheets. While many certification requirements make it difficult to introduce automated evidence collection and assessment, more recent standards, such as the upcoming EUCS, even specifically require the automated monitoring of some technical requirements. We firmly believe that incorporating automation is crucial:

- Audit preparation is an error-prone task. Many employees are involved, and manually collecting a large amount of evidence and entering it into Excel spreadsheets is bound to introduce human errors.

- It comes with high costs. As seen in the image above, the audit preparation takes about 3 to 5 months, in which the employees involved must look for and provide evidence. Thus, the company spends a lot of money on document acquisition since employees must work on these tasks instead of doing their regular work.

- It is not future-ready for upcoming EU regulations. The biggest downside of this manual approach is the regulatory situation around the CRA described above. In the current version of the EUCS, some requirements demand the continuous monitoring of cloud resources. Experts in the industry generally agree that certifications granted in the EU will play an important role in the CRA. Even if the CRA did not include the EUCS, this scheme will likely replace national certification catalogs; German companies that are compliant with the C5 today will most likely seek to be compliant with the EUCS “tomorrow.” A requirement of “continuous monitoring” of a company’s assets would make audit preparation in the classic sense, a one-time manual effort, simply impossible.

- “Sleep factor” of the CISO (as we call it). A non-negligible fact we identified in our projects and when talking to CISOs is that they want to have a holistic view of their current (multi-)cloud setup at any time. Manually collecting documents for an audit that takes place every two years is not helpful in a world of fast-changing cloud systems. Automated processes allow CISOs to sleep well since they know the company is audit-ready at any time.

Automation is thus not only practical for improving the security posture and saving costs; it will likely also be required in the future. And that is where the Clouditor comes into play. However, the entire certification process cannot be automated. For example, as of today, the actual audit still has to be performed by a verified auditor. We cannot eliminate the complete preparation process either, since there is always evidence that cannot be obtained automatically. Consider “physical” security, for example. However, even for these cases, we have a (not fully automated) solution in mind; more about that later.

Our solution: The Clouditor

We are building the Clouditor to automate audit preparation as much as possible. CISOs should be able to take advantage of continuous monitoring to avoid high personnel costs and human error and, of course, to ensure that the system is audit-ready at any time.

As described above, we cannot retrieve all kinds of evidence automatically. However, for technical evidence that can be retrieved automatically, the Clouditor is the right solution. In the following, we briefly describe the architecture and functionality of our tool, accompanied by our running example.

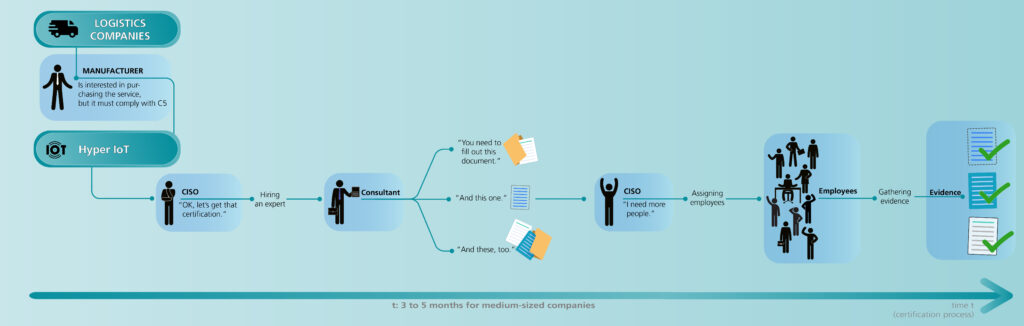

The Clouditor follows a microservices architecture. The picture above shows a simplified view of the Clouditor, in which each oval box represents a microservice:

Using the APIs of the respective cloud systems or of instantiations of the container orchestration platform Kubernetes[10] (top of the picture), the Clouditor checks the configurations of the resources deployed in these systems. This task is performed by a system-specific discoverer. The collected information (configurations) is then stored as evidence. This evidence has a unified format that is based on an ontology for describing cloud resources; see also the work of Banse et al.[11]. This abstraction allows rules to be defined without knowing the specific details of the cloud systems (since each cloud, of course, has its own way of defining its resources). Thus, for a new cloud connection (e.g., to the Google Cloud[12]), we would build a new discoverer that uses the respective API, converts the information into the ontology-based evidence format, and sends it to the assessment component. In our example, HyperIoT uses hundreds of VMs, distributed across AWS and Azure. Both of our cloud discoverers, namely AWS Discoverer and Azure Discoverer, retrieve the configurations for each VM in the respective cloud and transform them into our evidence format, which is then forwarded. An example of such evidence can be seen in the following:

    {
      "id": "83f0ef13-1341-4704-89f3-c314a1b56079",
      "name": "Location Tracker",
      "type": "VirtualMachine",
      "resource": {
        "automaticUpdates": {
          "enabled": true
        },
        "malwareProtection": {
          "enabled": true
        },
        …
      }
    }
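The discoverer step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Clouditor's actual code: the function names and both raw input shapes are invented for this example and do not reflect the real Azure or AWS API responses. Only the output fields follow the evidence example shown above.

```python
import uuid


def azure_vm_to_evidence(raw: dict) -> dict:
    """Convert a hypothetical Azure-style VM description into unified evidence."""
    return {
        "id": str(uuid.uuid4()),
        "name": raw["name"],
        "type": "VirtualMachine",
        "resource": {
            # Invented input field: "patching" -> "mode"
            "automaticUpdates": {"enabled": raw["patching"]["mode"] == "automatic"},
            # Invented input field: "antimalware"
            "malwareProtection": {"enabled": bool(raw["antimalware"])},
        },
    }


def aws_vm_to_evidence(raw: dict) -> dict:
    """Convert a hypothetical AWS-style VM description into unified evidence."""
    return {
        "id": str(uuid.uuid4()),
        "name": raw["Tags"]["Name"],
        "type": "VirtualMachine",
        "resource": {
            # Invented input fields: "AutoPatch" and "MalwareScan"
            "automaticUpdates": {"enabled": bool(raw["AutoPatch"])},
            "malwareProtection": {"enabled": bool(raw["MalwareScan"])},
        },
    }


azure_evidence = azure_vm_to_evidence(
    {"name": "Location Tracker", "patching": {"mode": "automatic"}, "antimalware": True}
)
aws_evidence = aws_vm_to_evidence(
    {"Tags": {"Name": "Fleet API"}, "AutoPatch": False, "MalwareScan": True}
)
```

Although the two inputs look nothing alike, both results share the same structure, which is what allows a single rule to be applied to VMs from either cloud.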

The assessment component then evaluates the incoming evidence according to a set of rules. As of today, standards are written in natural language. Therefore, “metrics” are used in the domain of cloud certifications. Here, metrics are technical rules extracted from the requirements of the respective catalogs that measure the properties of a cloud resource (the evidence). You can think of a metric as a machine-readable representation of a requirement, where one requirement is covered by one or more metrics. These metrics adhere to the format of the ontology, making them consistent with the evidence sent by the discovery components. There is a movement toward a more machine-readable approach, see OSCAL[13], but we are not there yet. Consequently, one of our main tasks is to define such metrics based on a catalog written in natural language. As an example, here is a simple rule measuring if automatic updates are enabled (written in the policy language Rego[14]):

    compliant if input.automaticUpdates.enabled == true

Now you can see how the ontology prescribes the way an AutomaticUpdate feature has to be set by the discoverer (creating the evidence) so that the assessment can apply the metric to it (using dot notation).
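To make the dot-notation lookup more tangible, here is a rough Python equivalent of applying such a metric to a piece of evidence. It is a sketch only: the Clouditor evaluates Rego policies, and the metric structure (`name`, `path`, `target`) as well as the shape of the returned result are assumptions made for this illustration.

```python
from functools import reduce


def lookup(resource: dict, path: str):
    """Resolve a dot-separated path such as 'automaticUpdates.enabled'."""
    return reduce(lambda node, key: node[key], path.split("."), resource)


def evaluate(metric: dict, evidence: dict) -> dict:
    """Apply one metric to one piece of evidence and return an assessment result."""
    try:
        actual = lookup(evidence["resource"], metric["path"])
    except (KeyError, TypeError):
        # Assumption: a property missing from the evidence counts as non-compliant.
        actual = None
    return {
        "evidenceId": evidence["id"],
        "metric": metric["name"],
        "compliant": actual == metric["target"],
    }


# Hypothetical metric definition mirroring the Rego rule above.
automatic_updates_enabled = {
    "name": "AutomaticUpdatesEnabled",
    "path": "automaticUpdates.enabled",
    "target": True,
}

evidence = {
    "id": "83f0ef13-1341-4704-89f3-c314a1b56079",
    "resource": {"automaticUpdates": {"enabled": True}},
}
result = evaluate(automatic_updates_enabled, evidence)
```

Because the path is resolved against the ontology-based `resource` object, the same metric works regardless of which cloud the evidence came from.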

The results of the rules applied to the evidence are the assessment results, and these are sent to the orchestrator. The orchestrator is the heart of the Clouditor, because it is the interface to the outside world, internally controls the components, and stores the necessary information (metrics, assessment results, and catalogs) in a database.

For data protection reasons, the assessment forwards the evidence to the evidence store. The idea of separating the evidence store from the orchestrator arose when we thought about how the Clouditor could be offered. In the case of a cloud-hosted or a software-as-a-service solution, Clouditor users could deploy the evidence store on premises to keep the evidence in their hands.
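The separation between orchestrator and evidence store can be illustrated with a small Python sketch. All class and function names here are hypothetical, and it is an assumption of this example (not something stated above) that an assessment result refers to its evidence only by ID. The point is merely that the full evidence lands in one place and the results in another.

```python
from dataclasses import dataclass, field


@dataclass
class EvidenceStore:
    """Holds the full evidence; could be deployed on premises."""
    items: dict = field(default_factory=dict)

    def store(self, evidence: dict) -> None:
        self.items[evidence["id"]] = evidence


@dataclass
class Orchestrator:
    """Holds assessment results, which point to evidence by ID only."""
    results: list = field(default_factory=list)

    def store_result(self, result: dict) -> None:
        self.results.append(result)


def assess_and_forward(evidence: dict, orchestrator: Orchestrator, store: EvidenceStore) -> None:
    """Evaluate one hard-coded example rule, then route the evidence and the result separately."""
    compliant = evidence["resource"]["automaticUpdates"]["enabled"] is True
    store.store(evidence)
    orchestrator.store_result(
        {"evidenceId": evidence["id"], "metric": "AutomaticUpdatesEnabled", "compliant": compliant}
    )


orchestrator = Orchestrator()
evidence_store = EvidenceStore()
assess_and_forward(
    {"id": "vm-1", "name": "Location Tracker", "resource": {"automaticUpdates": {"enabled": False}}},
    orchestrator,
    evidence_store,
)

# Following the path from a non-compliant result back to its evidence:
failed = [r for r in orchestrator.results if not r["compliant"]]
misconfigured = [evidence_store.items[r["evidenceId"]] for r in failed]
```

In such a setup, the orchestrator could run as a hosted service while the resource configurations themselves never leave the user's own infrastructure.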

Finally, we want the user to be able to inspect the evidence, assessment results, etc. in one unified view. Therefore, it is possible to see the whole state of the system on the dashboard. In case of non-compliance, you can follow the path to evidence that is misconfigured and make corrections accordingly. There is also the command line interface (CLI), which you can use to interact with the Clouditor on the terminal instead of a GUI. Since we want to support the whole certification process in the future, we are also conducting research on the automation of certificate maintenance in the context of the project MEDINA[15]. Hence, we have already prepared the storage and view of certificates as well.

The future Clouditor: Next steps

We are currently working on improving Clouditor’s support for the certification process:

- Support of more (cloud) systems, e.g., the Google Cloud and Office 365 Security Data

- Support of more catalogs, e.g., addressing domain-specific regulations such as the Medical Devices Regulation (the legal framework for medical devices within the EU)

- A result document indicating which requirements are already fulfilled and which are not. This document then can be inspected by the auditor conducting the audit.

- The possibility to evaluate requirements not covered by the Clouditor itself – either manually via UI (with evidence, of course) or via external tools, e.g., business processes via provided APIs.

The last item would let the Clouditor cover requirements beyond its own automated checks, leaving users with as little manual work as possible.
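The result document mentioned in the list above could, in its simplest form, be derived by grouping assessment results per requirement. The following Python sketch assumes that a requirement counts as fulfilled only if every result of every associated metric is compliant; the requirement IDs, metric names, and status labels are placeholders, not taken from any real catalog.

```python
def build_result_document(requirements: dict, results: list) -> dict:
    """Summarize assessment results per requirement.

    requirements maps a requirement ID to the names of its metrics;
    results is a list of dicts with the keys "metric" and "compliant".
    """
    document = {}
    for requirement_id, metric_names in requirements.items():
        relevant = [r for r in results if r["metric"] in metric_names]
        if not relevant:
            # No automated evidence available, e.g. for physical security.
            document[requirement_id] = "not assessed"
        elif all(r["compliant"] for r in relevant):
            document[requirement_id] = "fulfilled"
        else:
            document[requirement_id] = "not fulfilled"
    return document


# Placeholder requirement IDs and metric names, for illustration only.
requirements = {
    "REQ-1": ["AutomaticUpdatesEnabled"],
    "REQ-2": ["MalwareProtectionEnabled"],
    "REQ-3": ["PhysicalAccessControl"],
}
results = [
    {"metric": "AutomaticUpdatesEnabled", "compliant": True},
    {"metric": "AutomaticUpdatesEnabled", "compliant": True},
    {"metric": "MalwareProtectionEnabled", "compliant": True},
    {"metric": "MalwareProtectionEnabled", "compliant": False},
]
document = build_result_document(requirements, results)
```

Requirements without any automated results stay visible as "not assessed", which marks exactly the places where manual evidence or an external tool would have to step in.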

Conclusion

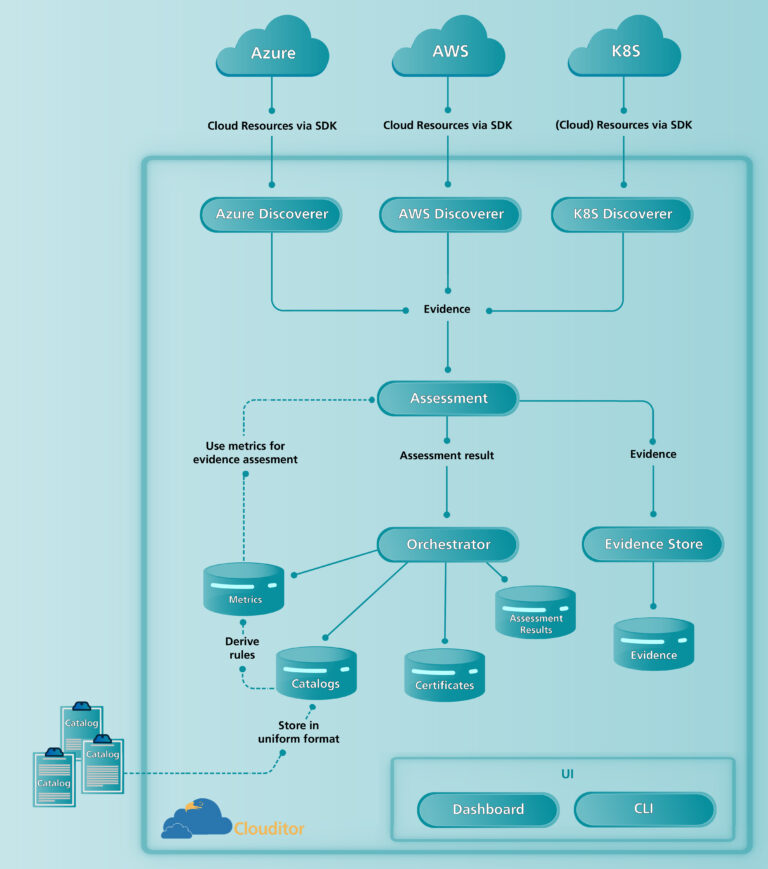

Obtaining certifications can be time-consuming and error-prone. We are building a solution to improve audit preparation by adding automation. Today, almost the entire certification process of a company is still done manually and involves many employees.

Compare the process of today (see picture above) with the process using Clouditor:

With our solution, you can automatically check the configurations in cloud systems or systems using Kubernetes. You can see which resources are deployed and what their compliance status is according to the chosen catalog. There will still be some tasks that can only be performed manually (top right corner). However, with Clouditor, the number of these manually performed tasks decreases significantly, and we relieve employees of tasks that can be done automatically. We also plan to integrate external tools to increase the coverage of cloud catalogs even more.

Note that an audit has to be carried out frequently (e.g., yearly), which always consumes a lot of time when everything is done manually. As you can see, using the Clouditor can save a lot of time and resources, reduce human errors, and, hopefully, let the CISO sleep well.

If you want to learn more details, either visit our GitHub page[16] to browse our freely accessible open-source repository or simply contact us. You are also very welcome to contact us if you have any suggestions for improvements.

Sources

[1] https://www.bsi.bund.de/dok/13368652

[2] https://www.enisa.europa.eu/publications/eucs-cloud-service-scheme

[3] https://cloudsecurityalliance.org/research/cloud-controls-matrix/

[4] https://info.flexera.com/CM-REPORT-State-of-the-Cloud

[5] https://www.gesetze-im-internet.de/mpg/

[6] https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=uriserv:OJ.L_.2017.117.01.0001.01.ENG

[7] https://digital-strategy.ec.europa.eu/en/policies/cybersecurity-act

[8] https://digital-strategy.ec.europa.eu/en/library/cyber-resilience-act

[9] https://www.enisa.europa.eu/

[11] C. Banse, I. Kunz, A. Schneider and K. Weiss, “Cloud Property Graph: Connecting Cloud Security Assessments with Static Code Analysis,” 2021 IEEE 14th International Conference on Cloud Computing (CLOUD), Chicago, IL, USA, 2021, pp. 13-19, doi: 10.1109/CLOUD53861.2021.00014.

[12] https://cloud.google.com/

[13] https://pages.nist.gov/OSCAL/

[14] https://www.openpolicyagent.org/docs/latest/policy-language/

Author

Nico Haas

After completing his Master’s degree in Computer Science at the University of Stuttgart, Nico Haas joined the Service and Application Security department at Fraunhofer AISEC in 2021. His primary work involves research and development in the area of cloud security, with a specific focus on Continuous Cloud Security Monitoring and Certification.

Contact: nico.haas@aisec.fraunhofer.de